Optimization using the ProblemInterface class

This notebook will show the basics of setting up a resilience optimization problem with the Problem class in fmdtools.sim.search module.

Copyright © 2024, United States Government, as represented by the Administrator of the National Aeronautics and Space Administration. All rights reserved.

The “"Fault Model Design tools - fmdtools version 2"” software is licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0.

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

from pump_stochastic import Pump

Problem Setup

The search module can be used to define an optimization problem around and fmdtools model/simulation in terms of variables, objectives, and constraints.

Different classes enable the optimization of faults, disturbances, and parameters. Below we define a DisturbanceProblem, which will optimize the s.eff state in the move_water function at time t=20

Note that if all objectives and constraints are sampled in time before the defined simulation end-point, it will finish before completion to save computational time.

from fmdtools.sim.search import DisturbanceProblem

class PumpDisturbanceProblem(DisturbanceProblem):

def init_problem(self, **kwargs):

# Defining sim to optimize over

self.add_sim(Pump(), 20, "move_water.r.s.eff", faultseq={14: {"import_water": "less_wat"}})

# In this case, we are optimizing the total flow in the `MoveWater` function as read at time t=25.

self.add_result_objective("f1", "move_water.s.total_flow", time=25, negative=True)

# We can additionally add constraints to the problem, in this case pressure as read at t=25.

self.add_result_constraint('g1', "wat_2.s.pressure", time=25, threshold=20, comparator='less', negative=True)

We can instantiate a new problem to optimize using:

new_problem = PumpDisturbanceProblem()

new_problem

PumpDisturbanceProblem with:

VARIABLES

-move_water.r.s.eff nan

OBJECTIVES

-f1 nan

CONSTRAINTS

-g1 nan

The string representation of the problem shows how the objectives/constraints have been set up, that reflects:

the form of the objectives as positive or negative for maximization/minimization (set with the

metricparameter inadd_objective).the form of the constraints as positive or negative based on the

thresholdparameter inadd_constraintand overallnegativekwargs.

These parameters will need to be adjusted depending on whether the interfacing optimization package is set up for minimization or maximization or in positive or negative null form (where feasible means positive or negative constraint values).

Problem interfaces

Now that this problem is set up, we now have interfaces which can be passed to optimization methods. These are methods which match the names of the objectives/constraints defined earlier which can be passed as callables to optimizaiton methods.

new_problem.f1(1)

new_problem.f1(0.5)

new_problem.f1(1.5)

new_problem.f1(2)

new_problem.f1(0.3)

np.float64(-5.039999999999998)

new_problem.f1(15)

np.float64(-31.5)

new_problem.res

t25p0.move_water.s.total_flow: 31.5

t25p0.wat_2.s.pressure: 150.0

Note that despite being different callables, to reduce simulation costs, obj_1 and con_1 only simulate the model when a new variable value is entered into the problem. This can be seen by looking at the current_iter repr, which shows the values of the objectives/constraints a the current variable value.

new_problem

PumpDisturbanceProblem with:

VARIABLES

-move_water.r.s.eff 15.0000

OBJECTIVES

-f1 -31.5000

CONSTRAINTS

-g1 -130.0000

Additionally, we can look at the iter_hist iteration history for the problem.

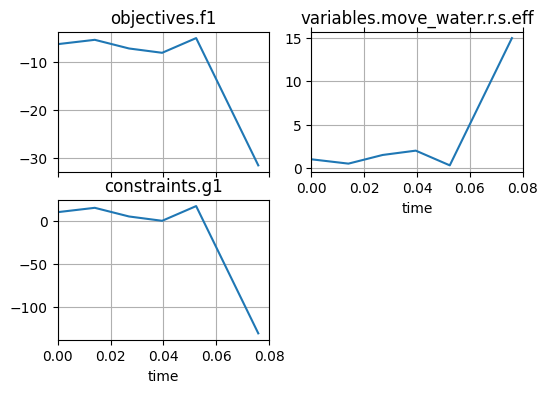

fig, ax = new_problem.iter_hist.plot_line('objectives.f1', 'variables.move_water.r.s.eff',

'constraints.g1')

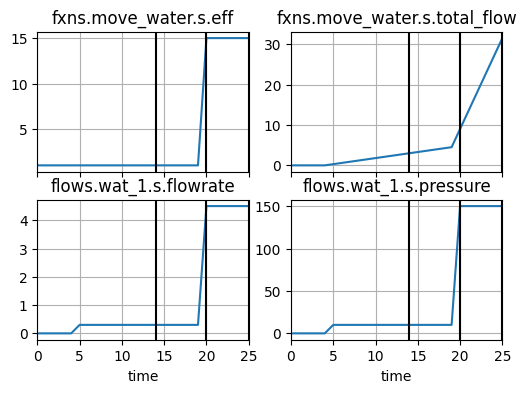

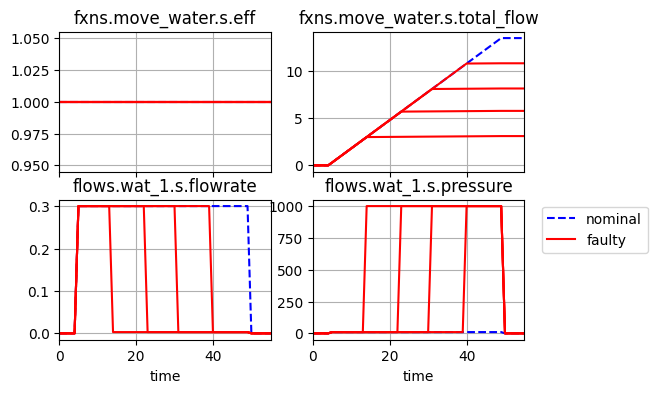

As well as the actual history of the sim at the current variable value:

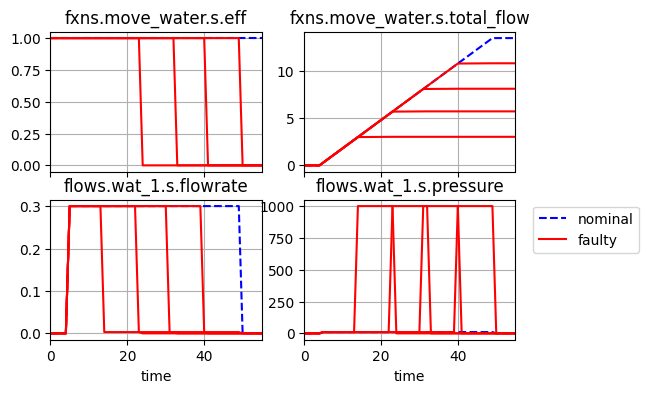

fig, ax = new_problem.hist.plot_line('fxns.move_water.s.eff', 'fxns.move_water.s.total_flow', 'flows.wat_1.s.flowrate', 'flows.wat_1.s.pressure',

time_slice=[14, 20, 25])

Optimization:

Now, we will demonstrate optimization using this problem as it is set up:

from scipy.optimize import minimize

new_problem = PumpDisturbanceProblem()

con_list = [{'type': 'ineq', 'fun':getattr(new_problem, con)} for con in new_problem.constraints]

Note that scipy minimize assumes that:

objectives are to be minimized, and

constraints must be held positive

The problem should thus be set up to accomodate this, by adjusting whether negative=True is sent to add_result_objective or add_result_constraint/

res = minimize(new_problem.f1, [1], constraints=con_list)

res

message: Optimization terminated successfully

success: True

status: 0

fun: -8.099999999999998

x: [ 2.000e+00]

nit: 2

jac: [-1.800e+00]

nfev: 4

njev: 2

multipliers: [ 1.800e-01]

As shown, the variables are optimized to a value of x=4.0, the maximum possible value of MoveWater.eff which was put in the problem. We can further verify the optimized result by looking at the problem:

new_problem

PumpDisturbanceProblem with:

VARIABLES

-move_water.r.s.eff 2.0000

OBJECTIVES

-f1 -8.1000

CONSTRAINTS

-g1 -0.0000

new_problem.g1(2)

np.float64(-0.0)

new_problem.iter_hist

time: array(10)

iter: array(10)

variables:

--move_water.r.s.eff: array(10)

objectives:

--f1: array(10)

constraints:

--g1: array(10)

As shown, the bound set constraint x>0 is active at the found minimum, as we would expect.

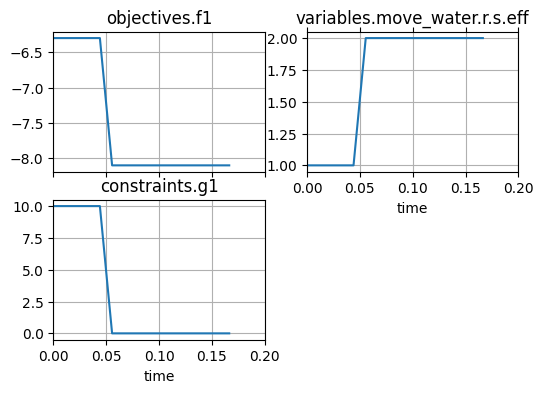

We can further visualize this solution using:

fig, ax = new_problem.iter_hist.plot_line('objectives.f1', 'variables.move_water.r.s.eff',

'constraints.g1')

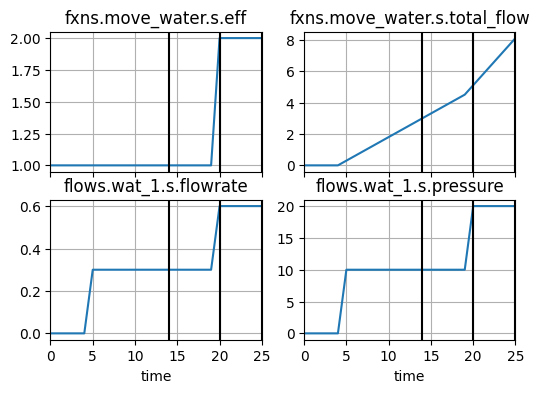

fig, ax = new_problem.hist.plot_line('fxns.move_water.s.eff', 'fxns.move_water.s.total_flow',

'flows.wat_1.s.flowrate', 'flows.wat_1.s.pressure',

time_slice=[14, 20, 25])

new_problem.variables['move_water.r.s.eff']

2

As shown, the constraint g1 is active, meaning the optimal presure at t=20 is just at the threshold of 20. This corresponds to the optimized MoveWater.eff value of 2.0.

assert abs(new_problem.constraints['g1'].value) < 0.001

assert abs(new_problem.variables['move_water.r.s.eff'] - 2.0) < 0.001

Multi-scenario Optimization

In addition to optimizing over single-scenarios ParameterSimProblem can be used to optimize over lists of scenarios from an FaultSample.

Here we define a slightly different problem, where instead of optimizing variable changes at specific times (e.g., faults), we instead optimize the model parameter delay, which changes how long of a delay there is before a fault when there is adverse pressure.

To see the effect of this accross scenarios, we first define a FaultSample:

from fmdtools.sim.sample import FaultDomain, FaultSample

fd = FaultDomain(Pump())

fd.add_all_fxn_modes("export_water")

fs = FaultSample(fd)

fs.add_fault_phases("on", args=(4,))

fs

FaultSample of scenarios:

- pump_fxns_export_water_block_t14p0

- pump_fxns_export_water_block_t23p0

- pump_fxns_export_water_block_t31p0

- pump_fxns_export_water_block_t40p0

We also need to define a ParameterDomain for the delay parameter to optimize:

from fmdtools.sim.sample import ParameterDomain

from examples.pump.pump_stochastic import PumpParam

pd = ParameterDomain(PumpParam)

pd.add_variables("delay")

pd(4)

PumpParam(cost=('repair', 'water'), delay=4)

We can now set up the ParameterSimProblem:

Our objective for this problem will be to minimize the cost model overall scenarios that is defined in mdl.classify.

from fmdtools.sim.search import ParameterSimProblem

class PumpParameterSimProblem(ParameterSimProblem):

def init_problem(self, **kwargs):

self.add_sim(Pump(), 'fault_sample', fs)

self.add_parameterdomain(pd)

self.add_result_objective("f1", 'classify.expected_cost')

psp = PumpParameterSimProblem()

We can then verify the problem setup. Note that no constraints will be used in this problem:

psp

psp.f1(15)

np.float64(13894.0625)

psp.f1(10)

np.float64(15152.5)

fig, ax = psp.hist.plot_line('fxns.move_water.s.eff', 'fxns.move_water.s.total_flow',

'flows.wat_1.s.flowrate', 'flows.wat_1.s.pressure')

psp.res

nominal.tend.classify.expected_cost: 0.0

pump_fxns_export_wat 4519.375000000001

pump_fxns_export_wat 4013.125

pump_fxns_export_wat 3563.125

pump_fxns_export_wat 3056.875

We may now minimize the objective function:

res = minimize(psp.f1, [5], method="SLSQP", bounds=[[0,40]])

res

message: Optimization terminated successfully

success: True

status: 0

fun: 15163.75

x: [ 5.000e+00]

nit: 1

jac: [ 0.000e+00]

nfev: 2

njev: 1

multipliers: []

res = minimize(psp.f1, [5], method="nelder-mead")

res

message: Optimization terminated successfully.

success: True

status: 0

fun: 15163.75

x: [ 5.000e+00]

nit: 13

nfev: 38

final_simplex: (array([[ 5.000e+00],

[ 5.000e+00]]), array([ 1.516e+04, 1.516e+04]))

Interestingly enough, while the optimizer gives a “optimization terminated successfully,” it stays at the initial point. This may be because of a poor fit of oftimization method. See:

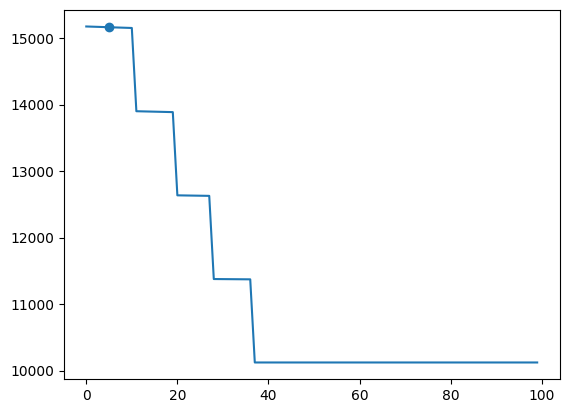

objs = [psp.f1(i) for i in range(100)]

from matplotlib import pyplot as plt

plt.plot(objs)

plt.scatter(res.x, res.fun)

<matplotlib.collections.PathCollection at 0x1b070673770>

As shown, the objective appears to be non-differentiable, with several plateaus between the starting point (20) and the minimum. Since the SLSQP solver is a gradient-based solver, it probably sees the gradient as 0 at this point, making it think the result is already an optimum.

While many different optimization packages exist, one of the more-developed ones is the pymoo package (see reference). Below we show how to interface with pymoo to use a solver that will find the optimal solution.

# import pip

# pip.main(['install', 'pymoo'])

Below we set the problem up as a pymoo problem object which can be used with a pymoo algorithm per the documentation. Note that this object corresponds directly (that is, is linked) to the original problem (see below).

from pymoo.core.problem import ElementwiseProblem

class Prob(ElementwiseProblem):

def __init__(self, problem_inter):

self.problem_inter = problem_inter

super().__init__(n_var=1, n_obj=1, n_con=0.0, xl=0.0, xu=40)

def _evaluate(self, x, out, *args, **kwargs):

out["F"] = self.problem_inter.f1(x)

---------------------------------------------------------------------------

ModuleNotFoundError Traceback (most recent call last)

Cell In[37], line 1

----> 1 from pymoo.core.problem import ElementwiseProblem

2 class Prob(ElementwiseProblem):

3 def __init__(self, problem_inter):

ModuleNotFoundError: No module named 'pymoo'

We can then optimize with a PatternSearch:

from pymoo.optimize import minimize

from pymoo.algorithms.soo.nonconvex.pattern import PatternSearch

import numpy as np

algorithm=PatternSearch(x0=np.array([5]))

psp_pymoo = PumpParameterSimProblem()

pymoo_prob = Prob(psp_pymoo)

res = minimize(pymoo_prob, algorithm, verbose=True)

=================================================

n_gen | n_eval | f_avg | f_min

=================================================

1 | 1 | 1.516375E+04 | 1.516375E+04

2 | 2 | 1.515812E+04 | 1.515250E+04

3 | 4 | 1.389434E+04 | 1.263619E+04

4 | 6 | 1.200500E+04 | 1.137381E+04

5 | 9 | 1.074853E+04 | 1.012325E+04

6 | 12 | 1.012325E+04 | 1.012325E+04

7 | 14 | 1.012325E+04 | 1.012325E+04

8 | 16 | 1.012325E+04 | 1.012325E+04

9 | 18 | 1.012325E+04 | 1.012325E+04

10 | 20 | 1.012325E+04 | 1.012325E+04

11 | 22 | 1.012325E+04 | 1.012325E+04

12 | 24 | 1.012325E+04 | 1.012325E+04

13 | 26 | 1.012325E+04 | 1.012325E+04

14 | 28 | 1.012325E+04 | 1.012325E+04

15 | 30 | 1.012325E+04 | 1.012325E+04

res.X

array([40.])

res.F

array([10123.25])

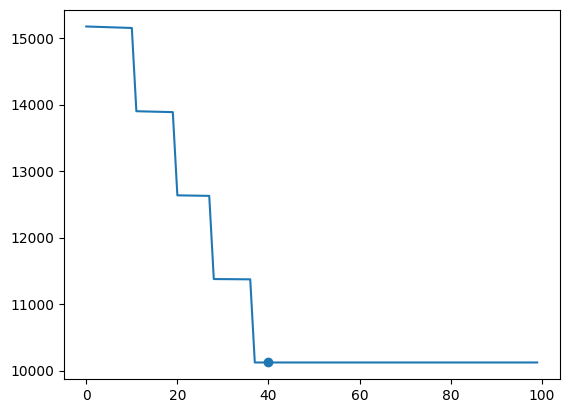

plt.plot(objs)

plt.scatter(res.X, res.F)

<matplotlib.collections.PathCollection at 0x2470f3adb90>

psp_pymoo.iter_hist

time: array(28)

iter: array(28)

variables:

--delay: array(28)

objectives:

--f1: array(28)

As shown, this method more capably finds the minimum in this case, in part because the underlying search algorithm (Hooke and Jeeves Pattern Search) is more robust to this type of problem.

We can visualize the results of this problem by looking at the simulation log:

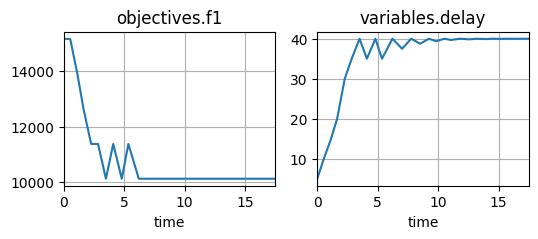

fig, ax = psp_pymoo.iter_hist.plot_line('objectives.f1', 'variables.delay')

As well as the history:

fig, ax = psp_pymoo.hist.plot_line('fxns.move_water.s.eff', 'fxns.move_water.s.total_flow',

'flows.wat_1.s.flowrate', 'flows.wat_1.s.pressure')

As shown, the main difference is that at the initial point, the short delay causes the pump to break during the time of the simulation, while at a long delay, the pump breaks later in the simulation. The optimum is at t=40 since this is the first feasible point where three scenarios no longer result in a mechanical break of the pump.