1. Introduction

This document describes F Prime Prime, also known as FPP or F Double Prime. FPP is a modeling language for the F Prime flight software framework. For more detailed information about F Prime, see the F Prime User Manual.

The goals of FPP are as follows:

-

To provide a modeling language for F Prime that is simple, easy to use, and well-tailored to its purpose.

-

To provide semantic checking and error reporting for F Prime models.

-

To generate code in the various languages that F Prime uses, e.g., C++ and JSON. In this document, we will call these languages the target languages.

Developers may combine code generated from FPP with code written by hand to create, e.g., deployable flight software (FSW) programs and ground data environments.

The name “F Double Prime” (or F″) deliberately suggests the idea of a “derivative” of F Prime (or F′). By “integrating” an FPP model (i.e., running the tools) you get a partial FSW implementation in the F Prime framework; and then by “integrating” again (i.e., providing the project-specific C++ implementation) you get a FSW application.

Purpose: The purpose of this document is to describe FPP in a way that is accessible to users, including beginning users. A more detailed and precise description is available in The FPP Language Specification. We recommend that you read this document before consulting that one.

Overview: The rest of this document proceeds as follows. Section 2 explains how to get up and running with FPP. Sections 3 through 12 describe the elements of an FPP model, starting with the simplest elements (constants and types) and working towards the most complex (components and topologies). Section 13 explains how to specify a model as a collection of files: for example, it covers the management of dependencies between files. Section 14 explains how to analyze FPP models and how to translate FPP models to C++ and JSON. It also explains how to translate an XML format previously used by F Prime into FPP. Section 15 explains how to write a C++ implementation against the code generated from an FPP model.

2. Installing FPP

Before reading the rest of this document, you should install the latest version of FPP. The installation instructions are available here:

Make sure that the FPP command-line tools are in your shell path.

For example, running fpp-check on the command line should succeed and should

prompt for standard input. You can type control-C to end

the program:

% fpp-check ^C %

fpp-check is the tool for checking that an FPP model is valid.

Like most FPP tools,

fpp-check reads either from named files or from standard input.

If one or more files are named on the command line, fpp-check reads those;

otherwise it reads from standard input.

As an example, the following two operations are equivalent:

% fpp-check < file.fpp % fpp-check file.fpp

The first operation redirects file.fpp into the standard input of

fpp-check.

The second operation names file.fpp as an input file of fpp-check.

Most of the examples in the following sections are complete FPP models.

You can run the models through

fpp-check by typing or pasting them into a file or into standard input.

We recommend that you to this for at least a few of the examples,

to get a feel for how FPP works.

3. Defining Constants

The simplest FPP model consists of one or more constant definitions. A constant definition associates a name with a value, so that elsewhere you can use the name instead of re-computing or restating the value. Using named constants makes the model easier to understand (the name says what the value means) and to maintain (changing a constant definition is easy; changing all and only the relevant uses of a repeated value is not).

This section covers the following topics:

-

Writing an FPP constant definition.

-

Writing an expression, which is the source text that defines the value associated with the constant definition.

-

Writing multiple constant definitions.

-

Writing a constant definition that spans two or more lines of source text.

3.1. Writing a Constant Definition

To write a constant definition, you write the keyword constant,

an equals sign, and an expression.

A later section

describes all the expressions you can write.

Here is an example that uses an integer literal expression representing

the value 42:

constant ultimateAnswer = 42This definition associates the name ultimateAnswer with the value 42.

Elsewhere in the FPP model you can use the name ultimateAnswer to represent

the value.

You can also generate a C++ header file that defines the C++ constant

ultimateAnswer and gives it the value 42.

As an example, do the following:

-

On the command line, run

fpp-check. -

When the prompt appears, type the text shown above, type return, type control-D, and type return.

You should see something like the following on your console:

% fpp-check constant ultimateAnswer = 42 ^D %

As an example of an incorrect model that produces an error message, repeat the exercise, but omit the value 42. You should see something like this:

% fpp-check constant ultimateAnswer = ^D fpp-check stdin: end of input error: expression expected

Here the fpp-check tool is telling you that it could not parse the input:

the input ended where it expected an expression.

3.2. Names

Names in FPP follow the usual rules for identifiers in a programming language:

-

A name must contain at least one character.

-

A name must start with a letter or underscore character.

-

The characters after the first may be letters, numbers, or underscores.

For example:

-

name,Name,_name, andname1are valid names. -

1invalidis not a valid name, because names may not start with digits.

3.2.1. Reserved Words

Certain sequences of letters such as constant are called out as reserved

words (also called keywords) in FPP.

Each reserved word has a special meaning, such as introducing a constant

declaration.

The FPP Language Specification has a complete list of reserved words.

In this document, we will introduce reserved words as needed to explain

the language features.

Using a reserved word as a name in the ordinary way causes a parsing error. For example, this code is incorrect:

constant constant = 0To use a reserved word as a name, you must put the character $ in

front of it with no space.

For example, this code is legal:

constant $constant = 0The character sequence $constant represents the name constant,

as opposed to the keyword constant.

You can put the character $ in front of any identifier,

not just a keyword.

If the identifier is not a keyword, then the $ has no effect.

For example, $name has the same meaning as name.

3.2.2. Name Clashes

FPP will not let you define two different symbols of the same kind with the same name. For example, this code will produce an error:

constant c = 0

constant c = 1Two symbols can have the same unqualified name if they reside in different modules or enums; these concepts are explained below. Two symbols can also have the same name if the analyzer can distinguish them based on their kinds. For example, an array type (described below) and a constant can have the same name, but an array type and a struct type may not. The FPP Language Specification has all the details.

3.3. Expressions

This section describes the expressions that you can write as part of a constant definition. Expressions appear in other FPP elements as well, so we will refer back to this section in later sections of the manual.

3.3.1. Primitive Values

A primitive value expression represents a primitive machine value, such as an integer. It is one of the following:

-

A decimal integer literal value such as

1234. -

A hexadecimal integer literal value such as

0xABCDor0xabcd. -

A floating-point literal value such as

12.34or1234e-2. -

A Boolean literal expression

trueorfalse.

As an exercise, construct some constant definitions with primitive values as their

expressions, and

feed the results to fpp-check.

For example:

constant a = 1234

constant b = 0xABCDIf you get an error, make sure you understand why.

3.3.2. String Values

A string value represents a string of characters. There are two kinds of string values: single-line strings and multiline strings.

Single-line strings:

A single-line string represents a string of characters

that does not contain a newline character.

It is written as a string of characters enclosed in double quotation

marks ".

For example:

constant s = "This is a string."To put the double-quote character in a string, write the double quote

character as \", like this:

constant s = "\"This is a quotation within a string,\" he said."To encode the character \ followed by the character ", write

the backslash character as \\, like this:

constant s = "\\\""This string represents the literal character sequence \, ".

In general, the sequence \ followed by a character c

is translated to c.

This sequence is called an escape sequence.

Multiline strings:

A multiline string represents a string of characters

that may contain a newline character.

It is enclosed in a pair of sequences of three double quotation

marks """.

For example:

constant s = """

This is a multiline string.

It has three lines.

"""When interpreting a multiline string, FPP ignores any newline

characters at the start and end of the string.

FPP also ignores any blanks to the left of the column where

the first """ appears.

For example, the string shown above consists of three lines and starts

with This.

Literal quotation marks are allowed inside a multiline string:

constant s = """

"This is a quotation within a string," he said.

"""Escape sequences work as for single-line strings. For example:

constant s = """

Here are three double-quote characters in a row: \"\"\"

"""3.3.3. Array Values

An array value expression represents a fixed-size array of values. To write an array value expression, you write a comma-separated list of one or more values (the array elements) enclosed in square brackets. Here is an example:

constant a = [ 1, 2, 3 ]This code associates the name a with the array of

integers

[ 1, 2, 3 ].

As mentioned in the introduction, an FPP model describes the structure of a FSW application; the computations are specified in a target language such as C++. As a result, FPP does not provide an array indexing operation. In particular, it does not specify the index of the leftmost array element; that is up to the target language. For example, if the target language is C++, then array indices start at zero.

Here are some rules for writing array values:

-

An array value must have at least one element. That is,

[]is not a valid array value. -

The types of the elements must match. For example, the following code is illegal, because the value

1(which has typeInteger) and the value"abcd"(which has typestring) are incompatible:constant mismatch = [ 1, "abcd" ]Try entering this example into

fpp-checkand see what happens.

What does it mean for types to match? The FPP Specification has all the details, and we won’t attempt to repeat them here. In general, things work as you would expect: for example, we can convert an integer value to a floating-point value, so the following code is allowed:

constant a = [ 1, 2.0 ]It evaluates to an array of two floating-point values.

If you are not sure whether a type conversion is allowed, you can

ask fpp-check.

For example: can we convert a Boolean value to an integer value?

In older languages like C and C++ we can, but in many newer languages

we can’t. Here is the answer in FPP:

% fpp-check

constant a = [ 1, true ]

^D

fpp-check

stdin: 1.16

constant a = [ 1, true ]

^

error: cannot compute common type of Integer and bool

So no, we can’t.

Here are two more points about array values:

-

Any legal value can be an element of an array value, so in particular arrays of arrays are allowed. For example, this code is allowed:

constant a = [ [ 1, 2 ], [ 3, 4 ] ]It represents an array with two elements: the array

[ 1, 2 ]and the array[ 3, 4 ]. -

To avoid repeating values, a numeric, string, or Boolean value is automatically promoted to an array of appropriate size whenever necessary to make the types work. For example, this code is allowed:

constant a = [ [ 1, 2, 3 ], 0 ]It is equivalent to this:

constant a = [ [ 1, 2, 3 ], [ 0, 0, 0 ] ]

3.3.4. Array Elements

An array element expression represents an element of an array value. To write an array element expression, you write an array value followed by an index expression enclosed in square brackets. The index expression must resolve to a number. The number specifies the index of the array element, where the array indices start at zero.

Here is an example:

constant a = [ 1, 2, 3 ]

constant b = a[1]In this example, the constant b has the value 2.

The index expression must resolve to a number that is in range

for the array.

For example, this code is incorrect, because "hello" is not a number:

constant a = [ 1, 2, 3 ]

constant b = a["hello"]This code is incorrect because 3 is not in the range [0, 2]:

constant a = [ 1, 2, 3 ]

constant b = a[3]3.3.5. Struct Values

A struct value expression represents a C- or C++-style structure, i.e., a mapping of names to values. To write a struct value expression, you write a comma-separated list of zero or more struct members enclosed in curly braces. A struct member consists of a name, an equals sign, and a value.

Here is an example:

constant s = { x = 1, y = "abc" }This code associates the name s with a struct value.

The struct value has two members x and y.

Member x has the integer value 1, and member y has the string value "abc".

The order of members: When writing a struct value, the order in which the

members appear does not matter.

For example, in the following code, constants s1 and s2 denote the same

value:

constant s1 = { x = 1, y = "abc" }

constant s2 = { y = "abc", x = 1 }The empty struct: The empty struct is allowed:

constant s = {}Arrays in structs: You can write an array value as a member of a struct value. For example, this code is allowed:

constant s = { x = 1, y = [ 2, 3 ] }Structs in arrays: You can write a struct value as a member of an array value. For example, this code is allowed:

constant a = [ { x = 1, y = 2 }, { x = 3, y = 4 } ]This code is not allowed, because the element types don’t match — an array is not compatible with a struct.

constant a = [ { x = 1, y = 2 }, [ 3, 4 ] ]However, this code is allowed:

constant a = [ { x = 1, y = 2 }, { x = 3 } ]Notice that the first member of a is a struct with two members x and y.

The second member of a is also a struct, but it has only one member x.

When the FPP analyzer detects that a struct type is missing a member,

it automatically adds the member, giving it a default value.

The default values are the ones you would expect: zero for numeric members, the empty

string for string members, and false for Boolean members.

So the code above is equivalent to the following:

constant a = [ { x = 1, y = 2 }, { x = 3, y = 0 } ]3.3.6. Struct Members

A struct member expression represents a member of a struct value. To write a struct member expression, you write a struct value followed by a dot and the member name. For example:

constant a = { x = 1, y = [ 2, 3 ] }

constant b = a.yHere a is a struct value with members x and y

The constant b has the array value [ 2, 3 ], which is the

value stored in member y of a.

This code is incorrect because a is not a struct value:

constant a = 0

constant b = a.yThis code is incorrect because z is not a member of a:

constant a = { x = 1, y = [ 2, 3 ] }

constant b = a.z3.3.7. Name Expressions

A name expression is a use of a name appearing in a constant definition. It stands for the associated constant value. For example:

constant a = 1

constant b = aIn this code, constant b has the value 1.

The order of definitions does not matter, so this code is equivalent:

constant b = a

constant a = 1The only requirement is that there may not be any cycles in the graph

consisting of constant definitions and their uses.

For example, this code is illegal, because there is a cycle from a to b to

c and back to a:

constant a = c

constant b = a

constant c = bTry submitting this code to fpp-check, to see what happens.

Names like a, b, and c are simple or unqualified names.

Names can also be qualified: for example A.a is allowed.

We will discuss qualified names further when we introduce

module definitions and enum definitions below.

3.3.8. Value Arithmetic Expressions

A value arithmetic expression performs arithmetic on values. It is one of the following:

-

A negation expression, for example:

constant a = -1 -

A binary operation expression, where the binary operation is one of

+(addition),-(subtraction),*(multiplication), and/(division). For example:constant a = 1 + 2 -

A parenthesis expression, for example:

constant a = (1)

The following rules apply to arithmetic expressions:

-

The subexpressions must be integer or floating-point values.

-

If there are any floating-point subexpressions, then the entire expression is evaluated using 64-bit floating-point arithmetic.

-

Otherwise the expression is evaluated using arbitrary-precision integer arithmetic.

-

In a division operation, the second operand may not be zero or (for floating-point values) very close to zero.

3.3.9. Compound Expressions

Wherever you can write a value inside an expression, you can write a more complex expression there, so long as the types work out. For example, these expressions are valid:

constant a = (1 + 2) * 3

constant b = [ 1 + 2, 3 ]The first example is a binary expression whose first operand is a parentheses expression; that parentheses expression in turn has a binary expression as its subexpression. The second example is an array expression whose first element is a binary expression.

This expression is invalid, because 1 + 2.0 evaluates to a floating-point

value, which is incompatible with type string:

constant a = [ 1 + 2.0, "abc" ]Compound expressions are evaluated in the obvious way. For example, the constant definitions above are equivalent to the following:

constant a = 9

constant b = [ 3, 3 ]For compound arithmetic expressions, the precedence and associativity rules are the usual ones (evaluate parentheses first, then multiplication, and so forth).

3.4. Multiple Definitions and Element Sequences

Typically you want to specify several definitions in a model source file, not just one. There are two ways to do this:

-

You can separate the definitions by one or more newlines, as shown in the examples above.

-

You can put the definitions on the same line, separated by a semicolon.

For example, the following two code excerpts are equivalent:

constant a = 1

constant b = 2constant a = 1; constant b = 2More generally, a collection of several constant definitions is an example of an element sequence, i.e., a sequence of similar syntactic elements. Here are the rules for writing an element sequence:

-

Every kind of element sequence has optional terminating punctuation. The terminating punctuation is either a semicolon or a comma, depending on the kind of element sequence. For constant definitions, it is a semicolon.

-

When writing elements on separate lines, the terminating punctuation is optional.

-

When writing two or more elements on the same line, the terminating punctuation is required between the elements and optional after the last element.

3.5. Multiline Definitions

Sometimes, especially for long definitions, it is useful to split a definition across two or more lines. In FPP there are several ways to do this.

First, FPP ignores newlines that follow opening symbols like [ and precede

closing symbols like ].

For example, this code is allowed:

constant a = [

1, 2, 3

]Second, the elements of an array or struct form an element sequence (see the previous section), so you can write each element on its own line, omitting the commas if you wish:

constant s = {

x = 1

y = 2

z = 3

}This is a clean way to write arrays and structs.

The assignment of each element to its own line and the lack of

terminating punctuation

make it easy to rearrange the elements.

In particular, one can do a line-by-line sort on the elements (for example, to

sort struct members alphabetically by name) without concern for messing up the

arrangement of commas.

If we assume that the example represents the first five lines of a source file,

then in vi this is easily done as :2-4!sort.

Third, FPP ignores newlines that follow connecting symbols such as = and +

For example, this code is allowed;

constant a =

1

constant b = 1 +

2Finally, you can always create an explicit line continuation by escaping

one or more newline characters with \:

constant \

a = 1Note that in this example you need the explicit continuation, i.e., this code is not legal:

constant

a = 13.6. Framework Constants

Certain constants defined in FPP have a special meaning in the

F Prime framework.

These constants are called framework constants.

For example, the constant FW_FIXED_LENGTH_STRING_SIZE

defines the default maximum size of a string.

(We will define string types

in a later section of this

manual.)

You typically set these constants by overriding configuration

files provided in the directory default/config in the F Prime repository.

For example, the file default/config/FpConstants.fpp provides a default

definition for FW_FIXED_LENGTH_STRING_SIZE.

You can override this default value by a different definition for this

constant.

The

F

Prime User Manual

explains how to do this configuration.

The FPP analyzer does not require that framework constants be defined

unless they are used.

For example, the following model is valid, because it neither defines nor users

FW_FIXED_LENGTH_STRING_SIZE:

constant a = 0The following model is valid because it defines and uses FW_FIXED_LENGTH_STRING_SIZE:

constant FW_FIXED_LENGTH_STRING_SIZE = 80

constant a = FW_FIXED_LENGTH_STRING_SIZEThe following model is invalid, because it uses FW_FIXED_LENGTH_STRING_SIZE

without defining it:

constant a = FW_FIXED_LENGTH_STRING_SIZEIf framework constants are defined in the FPP model, then

then they must conform to certain rules.

These rules are spelled out in detail in the

The

FPP Language Specification.

For example, FW_FIXED_LENGTH_STRING_SIZE must have an integer type.

So this model is invalid:

constant FW_FIXED_LENGTH_STRING_SIZE = "abc"Here is what happens when you run this model through fpp-check:

% fpp-check constant FW_FIXED_LENGTH_STRING_SIZE = "abc" ^D fpp-check stdin:1.1 constant FW_FIXED_LENGTH_STRING_SIZE = "abc" ^ error: the F Prime framework constant FW_FIXED_LENGTH_STRING_SIZE must have an integer type

4. Writing Comments and Annotations

In FPP, you can write comments that are ignored by the parser. These are just like comments in most programming languages. You can also write annotations that have no meaning in the FPP model but are attached to model elements and may be carried through to translation — for example, they may become comments in generated C++ code.

4.1. Comments

A comment starts with the character # and goes to the end of the line.

For example:

# This is a commentTo write a comment that spans multiple lines, start each line with #:

# This is a comment.

# It spans two lines.4.2. Annotations

Annotations are attached to elements of a model, such as constant definitions. A model element that may have an annotation attached to it is called an annotatable element. Any constant definition is an annotatable element. Other annotatable elements will be called out in future sections of this document.

There are two kinds of annotations: pre annotations and post annotations:

-

A pre annotation starts with the character

@and is attached to the annotatable element that follows it. -

A post annotation starts with the characters

@<and is attached to the annotatable element that precedes it.

In either case

-

Any white space immediately following the

@or@<characters is ignored. -

The annotation goes to the end of the line.

For example:

@ This is a pre annotation

constant c = 0 @< This is a post annotationMultiline annotations are allowed. For example:

@ This is a pre annotation.

@ It has two lines.

constant c = 0 @< This is a post annotation.

@< It also has two lines.The meaning of the annotations is tool-specific. A typical use is to

concatenate the pre and post annotations into a list of lines and emit them as

a comment. For example, if you send the code immediately above through the

tool fpp-to-cpp,

it should generate a file FppConstantsAc.hpp. If you examine that file,

you should see, in relevant part, the following code:

//! This is a pre annotation.

//! It has two lines.

//! This is a post annotation.

//! It also has two lines.

enum FppConstant_c {

c = 0

};The two lines of the pre annotation and the two lines of the post annotation have been concatenated and written out as a Doxygen comment attached to the constant definition, represented as a C++ enum.

In the future, annotations may be used to provide additional capabilities, for example timing analysis, that are not part of the FPP language specification.

5. Defining Modules

In an FPP model, a module is a group of model elements that are all qualified with a name, called the module name. An FPP module corresponds to a namespace in C++ and a module in Python. Modules are useful for (1) organizing a large model into a hierarchy of smaller units and (2) avoiding name clashes between different units.

To define a module, you write the keyword module followed by one

or more definitions enclosed in curly braces.

For example:

module M {

constant a = 1

}The name of a module qualifies the names of all the definitions that the module

encloses.

To write the qualified name, you write the qualifier, a dot, and the base name:

for example M.a. (This is also the way that

name qualification works in Python, Java, and Scala.)

Inside the module, you can use the qualified name or the unqualified

name.

Outside the module, you must use the qualified name.

For example:

module M {

constant a = 1

constant b = a # OK: refers to M.a

constant c = M.b

}

constant a = M.a

constant c = b # Error: b is not in scope hereAs with namespaces in C++, you can close a module definition and reopen it later. All the definitions enclosed by the same name go in the module with that name. For example, the following code is allowed:

module M {

constant a = 0

}

module M {

constant b = 1

}It is equivalent to this code:

module M {

constant a = 0

constant b = 1

}You can define modules inside other modules. When you do that, the name qualification works in the obvious way. For example:

module A {

module B {

constant c = 0

}

}

constant c = A.B.cThe inside of a module definition is an element sequence with a semicolon as the optional terminating punctuation. For example, you can write this:

module M { constant a = 0; constant b = 1 }; constant c = M.aA module definition is an annotatable element, so you can attach annotations to it, like this:

@ This is module M

module M {

constant a = 0

}6. Defining Types

An FPP model may include one or more type definitions. These definitions describe named types that may be used elsewhere in the model and that may generate code in the target language. For example, an FPP type definition may become a class definition in C++.

There are four kinds of type definitions:

-

Array type definitions

-

Struct type definitions

-

Abstract type definitions

-

Alias type definitions

Type definitions may appear at the top level or inside a module definition. A type definition is an annotatable element.

6.1. Array Type Definitions

An array type definition associates a name with an array type. An array type describes the shape of an array value. It specifies an element type and a size.

6.1.1. Writing an Array Type Definition

As an example, here is an array type definition that associates

the name A with an array of three values, each of which is a 32-bit unsigned

integer:

array A = [3] U32In general, to write an array type definition, you write the following:

-

The keyword

array. -

The name of the array type.

-

An equals sign

=. -

An expression enclosed in square brackets

[…]denoting the size (number of elements) of the array. -

A type name denoting the element type. The available type names are discussed below.

Notice that the size expression precedes the element type, and the whole

type reads left to right.

For example, you may read the type [3] U32 as "array of 3 U32."

The size may be any legal expression. It doesn’t have to be a literal integer. For example:

constant numElements = 10

array A = [numElements] U326.1.2. Type Names

The following type names are available for the element types:

-

The type names

U8,U16,U32, andU64, denoting the type of unsigned integers of width 8, 16, 32, and 64 bits. -

The type names

I8,I16,I32, andI64, denoting the type of signed integers of width 8, 16, 32, and 64 bits. -

The type names

F32andF64, denoting the type of floating-point values of width 32 and 64 bits. -

The type name

bool, denoting the type of Boolean values (trueandfalse). -

The type name

string, denoting the type of string values. For example:type FwSizeStoreType = U16 constant FW_FIXED_LENGTH_STRING_SIZE = 256 # A is an array of 3 strings with size FW_FIXED_LENGTH_STRING_SIZE array A = [3] stringNotice that we defined the framework type

FwSizeStoreTypeand the framework constantFW_FIXED_LENGTH_STRING_SIZE. These definitions are required when using the typestring. Typically you provide them as part of the F Prime configuration.FwSizeStoreTypespecifies the type to use for storing the length of a string.FW_FIXED_LENGTH_STRING_SIZEspecifies the size of the buffer used to store string data. -

The type name

string sizee, where e is a numeric expression specifying the maximum string length of any value of that type. For example:type FwSizeStoreType = U16 # A is an array of 3 strings with size 40 array A = [3] string size 40Here we just specified

FwSizeStoreType. If a string type has an explicit size, then it does not use the definitionFW_FIXED_LENGTH_STRING_SIZE. -

A name associated with another type definition. In particular, an array definition may have another array definition as its element type; this situation is discussed further below.

An array type definition may not refer to itself (array type definitions are not recursive). For example, this definition is illegal:

array A = [3] A # Illegal: the definition of A may not refer to itself6.1.3. Default Values

Optionally, you can specify a default value for an array type.

To do this, you write the keyword default and an expression

that evaluates to an array value.

For example, here is an array type A with default value [ 1, 2, 3 ]:

array A = [3] U32 default [ 1, 2, 3 ]A default value expression need not be a literal array value; it can be any expression with the correct type. For example, you can create a named constant with an array value and use it multiple times, like this:

constant a = [ 1, 2, 3 ]

array A = [3] U8 default a # default value is [ 1, 2, 3 ]

array B = [3] U16 default a # default value is [ 1, 2, 3 ]If you don’t specify a default value, then the type gets an automatic default value,

consisting of the default value for each element.

The default numeric value is zero, the default Boolean value is false,

the default string value is "", and the default value of an array type

is specified in the type definition.

The type of the default expression must match the size and element type of the array, with type conversions allowed as discussed for array values. For example, this default expression is allowed, because we can convert integer values to floating-point values, and we can promote a single value to an array of three values:

array A = [3] F32 default 1 # default value is [ 1.0, 1.0, 1.0 ]However, these default expressions are not allowed:

array A = [3] U32 default [ 1, 2 ] # Error: size does not matcharray B = [3] U32 default [ "a", "b", "c" ] # Error: element type does not match6.1.4. Format Strings

You can specify an optional format string which says how to display each element value and optionally provides some surrounding text. For example, here is an array definition that interprets three integer values as wheel speeds measured in RPMs:

array WheelSpeeds = [3] U32 format "{} RPM"Then an element with value 100 would have the format 100 RPM.

Note that the format string specifies the format for an element, not the

entire array.

The way an entire array is displayed is implementation-specific.

A standard way is a comma-separated list enclosed in square brackets.

For example, a value [ 100, 200, 300 ] of type WheelSpeeds might

be displayed as [ 100 RPM, 200 RPM, 300 RPM ].

Or, since the format is the same for all elements, the implementation could

display the array as [ 100, 200, 300 ] RPM.

The special character sequence {} is called a replacement field; it says

where to put the value in the format text.

Each format string must have exactly one replacement field.

The following replacement fields are allowed:

-

The field

{}for displaying element values in their default format. -

The field

{c}for displaying a character value -

The field

{d}for displaying a decimal value -

The field

{x}for displaying a hexadecimal value -

The field

{o}for displaying an octal value -

The field

{e}for displaying a rational value in exponent notation, e.g.,1.234e2. -

The field

{f}for displaying a rational value in fixed-point notation, e.g.,123.4. -

The field

{g}for displaying a rational value in general format (fixed-point notation up to an implementation-dependent size and exponent notation for larger sizes).

For field types c, d, x, and o, the element type must be an integer

type.

For field types e, f, and g, the element type must be a floating-point

type.

For example, the following format string is illegal, because

type string is not an integer type:

array A = [3] string format "{d}" # Illegal: string is not an integer typeFor field types e, f, and g, you can optionally specify a precision

by writing a decimal point and an integer before the field type. For example,

the replacement field {.3f}, specifies fixed-point notation with a precision

of 3.

To include the literal character { in the formatted output, you can write

{{, and similarly for } and }}. For example, the following definition

array A = [3] U32 format "{{element {}}}"specifies a format string element {0} for element value 0.

No other use of { or } in a format string is allowed. For example, this is illegal:

array A = [3] U32 format "{" # Illegal use of { characterYou can include both a default value and a format; in this case, the default value must come first. For example:

array WheelSpeeds = [3] U32 default 100 format "{} RPM"If you don’t specify an element format, then each element is displayed

using the default format for its type.

Therefore, omitting the format string is equivalent to writing the format

string "{}".

6.1.5. Arrays of Arrays

An array type may have another array type as its element type. In this way you can construct an array of arrays. For example:

array A = [3] U32

array B = [3] A # An array of 3 A, which is an array of 3 U32When constructing an array of arrays, you may provide any legal default expression, so long as the types are compatible. For example:

array A = [2] U32 default 10 # default value is [ 10, 10 ]

array B1 = [2] A # default value is [ [ 10, 10 ], [ 10, 10 ] ]

array B2 = [2] A default 1 # default value is [ [ 1, 1 ], [ 1, 1 ] ]

array B3 = [2] A default [ 1, 2 ] # default value is [ [ 1, 1 ], [ 2, 2 ] ]

array B4 = [2] A default [ [ 1, 2 ], [ 3, 4 ] ]6.2. Struct Type Definitions

A struct type definition associates a name with a struct type. A struct type describes the shape of a struct value. It specifies a mapping from element names to their types. As discussed below, it also specifies a serialization order for the struct elements.

6.2.1. Writing a Struct Type Definition

As an example, here is a struct type definition that associates the name S with

a struct type containing two members: x of type U32, and y of type string:

type FwSizeStoreType = U16

constant FW_FIXED_LENGTH_STRING_SIZE = 256

struct S { x: U32, y: string }In general, to write a struct type definition, you write the following:

-

The keyword

struct. -

The name of the struct type.

-

A sequence of struct type members enclosed in curly braces

{…}.

A struct type member consists of a name, a colon, and a

type name,

for example x: U32.

The struct type members form an element sequence in which the optional terminating punctuation is a comma. As usual for element sequences, you can omit the comma and use a newline instead. So, for example, we can write the definition shown above in this alternate way:

type FwSizeStoreType = U16

constant FW_FIXED_LENGTH_STRING_SIZE = 256

struct S {

x: U32

y: string

}6.2.2. Annotating a Struct Type Definition

As noted in the beginning of this section, a type definition is an annotatable element, so you can attach pre and post annotations to it. A struct type member is also an annotatable element, so any struct type member can have pre and post annotations as well. Here is an example:

type FwSizeStoreType = U16

constant FW_FIXED_LENGTH_STRING_SIZE = 256

@ This is a pre annotation for struct S

struct S {

@ This is a pre annotation for member x

x: U32 @< This is a post annotation for member x

@ This is a pre annotation for member y

y: string @< This is a post annotation for member y

} @< This is a post annotation for struct S6.2.3. Default Values

You can specify an optional default value for a struct definition.

To do this, you write the keyword default and an expression

that evaluates to a struct

value.

For example, here is a struct type S with default value { x = 1, y = "abc"

}:

type FwSizeStoreType = U16

constant FW_FIXED_LENGTH_STRING_SIZE = 256

struct S { x: U32, y: string } default { x = 1, y = "abc" }A default value expression need not be a literal struct value; it can be any expression with the correct type. For example, you can create a named constant with a struct value and use it multiple times, like this:

type FwSizeStoreType = U16

constant FW_FIXED_LENGTH_STRING_SIZE = 256

constant s = { x = 1, y = "abc" }

struct S1 { x: U8, y: string } default s

struct S2 { x: U32, y: string } default sIf you don’t specify a default value, then the struct type gets an automatic default value, consisting of the default value for each member.

The type of the default expression must match the type of the struct, with type conversions allowed as discussed for struct values. For example, this default expression is allowed, because we can convert integer values to floating-point values, and we can promote a single value to a struct with numeric members:

struct S { x: F32, y: F32 } default 1 # default value is { x = 1.0, y = 1.0 }And this default expression is allowed, because if we omit a member of a struct, then FPP will fill in the member and give it the default value:

struct S { x: F32, y: F32 } default { x = 1 } # default value is { x = 1.0, y = 0.0 }However, these default expressions are not allowed:

type FwSizeStoreType = U16

constant FW_FIXED_LENGTH_STRING_SIZE = 256

struct S1 { x: U32, y: string } default { z = 1 } # Error: member z does not matchtype FwSizeStoreType = U16

constant FW_FIXED_LENGTH_STRING_SIZE = 256

struct S2 { x: U32, y: string } default { x = "abc" } # Error: type of member x does not match6.2.4. Member Arrays

For any struct member, you can specify that the member is an array of elements. To do this you, write an array the size enclosed in square brackets before the member type. For example:

struct S {

x: [3] U32

}This definition says that struct S has one element x,

which is an array consisting of three U32 values.

We call this array a member array.

Member arrays vs. array types: Member arrays let you include an array of elements as a member of a struct type, without defining a separate named array type. Also, member arrays generate less code than named arrays. Whereas a member size array is a native C++ array, each named array is a C++ class.

On the other hand, defining a named array is usually a good choice when

-

You want to use the array outside of any structure.

-

You want the convenience of a generated array class, which has a richer interface than the bare C++ array.

In particular, the generated array class provides bounds-checked access operations: it causes a runtime failure if an out-of-bounds access occurs. The bounds checking provides an additional degree of memory safety when accessing array elements.

Member arrays and default values: FPP ignores member array sizes when checking the types of default values. For example, this code is accepted:

struct S {

x: [3] U32

} default { x = 10 }The member x of the struct S gets three copies of the value

10 specified for x in the default value expression.

6.2.5. Member Format Strings

For any struct member, you can include an optional format.

To do this, write the keyword format and a format string.

The format string for a struct member has the same form as for an

array member.

For example, the following struct definition specifies

that member x should be displayed as a hexadecimal value:

type FwSizeStoreType = U16

constant FW_FIXED_LENGTH_STRING_SIZE = 256

struct Channel {

name: string

offset: U32 format "offset 0x{x}"

}How the entire struct is displayed depends on the implementation.

As an example, the value of S with name = "momentum" and offset = 1024

might look like this when displayed:

Channel { name = "momentum", offset = 0x400 }

If you don’t specify a format for a struct member, then the system uses the default format for the type of that member.

If the member has a size greater than one, then the format is applied to each element. For example:

struct Telemetry {

velocity: [3] F32 format "{} m/s"

}The format string is applied to each of the three

elements of the member velocity.

6.2.6. Struct Types Containing Named Types

A struct type may have an array or struct type as a member type. In this way you can define a struct that has arrays or structs as members. For example:

type FwSizeStoreType = U16

constant FW_FIXED_LENGTH_STRING_SIZE = 256

array Speeds = [3] U32

# Member speeds has type Speeds, which is an array of 3 U32 values

struct Wheel { name: string, speeds: Speeds }When initializing a struct, you may provide any legal default expression, so long as the types are compatible. For example:

type FwSizeStoreType = U16

constant FW_FIXED_LENGTH_STRING_SIZE = 256

array A = [2] U32

struct S1 { x: U32, y: string }

# default value is { s1 = { x = 0, y = "" }, a = [ 0, 0 ] }

struct S2 { s1: S1, a: A }

# default value is { s1 = { x = 0, y = "abc" }, a = [ 5, 5 ] }

struct S3 { s1: S1, a: A } default { s1 = { y = "abc" }, a = 5 }6.2.7. The Order of Members

For struct values,

we said that the order in which the members appear in the value is not

significant.

For example, the expressions { x = 1, y = 2 } and { y = 2, x = 1 } denote

the same value.

For struct types, the rule is different.

The order in which the members appear is significant, because

it governs the order in which the members appear in the generated

code.

For example, the type struct S1 { x: U32, y : string } might generate a C++

class S1 with members x and y laid out with x first; while struct S2

{ y : string, x : U32 }

might generate a C++ class S2 with members x and y laid out with y

first.

Since class members are generally serialized in the order in which they appear in

the class,

the members of S1 would be serialized with x first, and the members of

S2

would be serialized with y first.

Serializing S1 to data and then trying to deserialize it to S2 would

produce garbage.

The order matters only for purposes of defining the type, not for assigning default values to it. For example, this code is legal:

type FwSizeStoreType = U16

constant FW_FIXED_LENGTH_STRING_SIZE = 256

struct S { x: U32, y: string } default { y = "abc", x = 5 }FPP struct values have no inherent order associated with their members. However, once those values are assigned to a named struct type, the order becomes fixed.

6.3. Abstract Type Definitions

An array or struct type definition specifies a complete type: in addition to the name of the type, it provides the names and types of all the members. An abstract type, by contrast, has an incomplete or opaque definition. It provides only a name N. Its purpose is to tell the analyzer that a type with name N exists and will be defined elsewhere. For example, if the target language is C++, then the type is a C++ class.

To define an abstract type, you write the keyword type followed

by the name of the type.

For example, you can define an abstract type T; then you can construct

an array A with member type T:

type T # T is an abstract type

array A = [3] T # A is an array of 3 values of type TThis code says the following:

-

A type

Texists. It is defined in the implementation, but not in the model. -

Ais an array of three values, each of typeT.

Now suppose that the target language is C++. Then the following happens when generating code:

-

The definition

type Tdoes not cause any code to be generated. -

The definition

array A =… causes a C++ classAto be generated. By F Prime convention, the generated files areAArrayAc.hppandAArrayAc.cpp. -

File

AArrayAc.hppincludes a header fileT.hpp.

It is up to the user to implement a C++ class T with

a header file T.hpp.

This header file must define T in a way that is compatible

with the way that T is used in A.

We will have more to say about this topic in the section on

implementing abstract types.

In general, an abstract type T is opaque in the FPP model

and has no values that are expressible in the model.

Thus, every use of an abstract type T represents the default value

for T.

The implementation of T in the target language

provides the default value.

In particular, when the target language is C++, the default

value is the zero-argument constructor T().

6.4. Alias Type Definitions

An alias type definition provides an alternate name for

a type that is defined elsewhere.

The alternate name is called an alias of the original type.

For example, here is an alias type definition specifying that the type

T is an alias of the type U32:

type T = U32Wherever this definition is available, the type T may be used

interchangeably with the type U32.

For example:

type T = U32

array A = [3] TAn alias type definition may refer to any type, including another

alias type, except that it may not refer to itself, either directly or through

another alias.

For example, here is an alias type definition specifying that the type

T is an alias of the struct type S:

struct S { x: U32, y: I32 }

type T = SHere is a pair of definitions specifying that S is an alias of T,

and T is an alias of F32:

type S = T

type T = F32Here is a pair of alias type definitions that is illegal, because each of the definitions indirectly refers to itself:

type S = T

type T = SAlias type definitions are useful for specifying configurations.

Part of a model can use a type T, without

defining T; elsewhere, configuration code can define

T to be the alias of another type.

F Prime uses this method to provide basic type whose definitions

configure the framework.

These types are defined in the file config/FpConfig.fpp.

6.5. Framework Types

Certain types defined in FPP have a special meaning in the F Prime

framework.

These types are called framework types.

For example, the type FwOpcodeType defines the type of

a command opcode.

(Commands are an F Prime feature that we describe

in a later section of this manual.)

Framework types are like framework constants: you typically define them by overriding configuration files provided by F Prime, and if they are defined, then they must conform to certain rules. The FPP Language Specification has all the details.

7. Defining Enums

An FPP model may contain one or more enum definitions. Enum is short for enumeration. An FPP enum is similar to an enum in C or C++. It defines a named type called an enum type and a set of named constants called enumerated constants. The enumerated constants are the values associated with the type.

An enum definition may appear at the top level or inside a module definition. An enum definition is an annotatable element.

7.1. Writing an Enum Definition

Here is an example:

enum Decision {

YES

NO

MAYBE

}This code defines an enum type Decision with three

enumerated constants: YES, NO, and MAYBE.

In general, to write an enum definition, you write the following:

-

The keyword

enum. -

The name of the enum.

-

A sequence of enumerated constants enclosed in curly braces

{…}.

The enumerated constants form an element sequence in which the optional terminating punctuation is a comma. For example, this definition is equivalent to the one above:

enum Decision { YES, NO, MAYBE }There must be at least one enumerated constant.

7.2. Using an Enum Definition

Once you have defined an enum, you can use the enum as a type and the enumerated constants as constants of that type. The name of each enumerated constant is qualified by the enum name. Here is an example:

enum State { ON, OFF }

constant initialState = State.OFFThe constant s has type State and value State.ON.

Here is another example:

enum Decision { YES, NO, MAYBE }

array Decisions = [3] Decision default Decision.MAYBEHere we have used the enum type as the type of the array member,

and we have used the value Decision.MAYBE as the default

value of an array member.

7.3. Numeric Values

As in C and C++, each enumerated constant has an associated

numeric value.

By default, the values start at zero and go up by one.

For example, in the enum Decision defined above,

YES has value 0, NO has value 1, and MAYBE has value 2.

You can optionally assign explicit values to the enumerated constants. To do this, you write an equals sign and an expression after each of the constant definitions. Here is an example:

enum E { A = 1, B = 2, C = 3 }This definition creates an enum type E with three enumerated constants E.A,

E.B, and E.C. The constants have 1, 2, and 3 as their associated numeric

values.

If you provide an explicit numeric value for any of the enumerated constants, then you must do so for all of them. For example, this code is not allowed:

# Error: cannot provide a value for just one enumerated constant

enum E { A = 1, B, C }Further, the values must be distinct.

For example, this code is not allowed, because

the enumerated constants A and B both have the value 2:

# Error: enumerated constant values must be distinct

enum E { A = 2, B = 1 + 1 }You may convert an enumerated constant to its associated numeric value. For example, this code is allowed:

enum E { A = 5 }

constant c = E.A + 1The constant c has the value 6.

However, you may not convert a numeric value to an enumerated constant. This is for type safety reasons: a value of enumeration type should have one of the numeric values specified in the type. Assigning an arbitrary number to an enum type would violate this rule.

For example, this code is not allowed:

enum E { A, B, C }

# Error: cannot assign integer 10 to type E

array A = [3] E default 107.4. The Representation Type

Each enum definition has an associated representation type. This is the primitive integer type used to represent the numeric values associated with the enumerated constants when generating code.

If you don’t specify a representation type, then the default

type is I32.

For example, in the enumerations defined in the previous sections,

the representation type is I32.

To specify an explicit representation type, you write it after

the enum name, separated from the name by a colon, like this:

enum Small : U8 { A, B, C }This code defines an enum Small with three enumerated constants

Small.A, Small.B, and Small.C.

Each of the enumerated constants is represented as a U8 value

in C++.

7.5. The Default Value

Every type in FPP has an associated default value. For enum types, if you don’t specify a default value explicitly, then the default value is the first enumerated constant in the definition. For example, given this definition

enum Decision { YES, NO, MAYBE }the default value for the type Decision is Decision.YES.

That may be too permissive, say if Decision represents

a decision on a bank loan.

Perhaps the default value should be Decision.MAYBE.

To specify an explicit default value, write the keyword default

and the enumerated constant after the enumerated constant

definitions, like this:

enum Decision { YES, NO, MAYBE } default MAYBENotice that when using the constant MAYBE as a default value, we

don’t need to qualify it with the enum name, because the

use appears inside the enum where it is defined.

8. Dictionary Definitions

One of the artifacts generated from an FPP model is a dictionary that tells the ground data system how to interpret and display the data produced by the FSW. By default, the dictionary contains representations of the following types and constants defined in FPP:

-

Types and constants that are known to the framework and that are needed by every dictionary, e.g.,

FwOpcodeType. -

Types and constants that appear in the definitions of the data produced by the FSW, e.g., event specifiers or telemetry specifiers. (In later sections of this manual we will explain how to define event reports and telemetry channels.)

Sometimes you will need the dictionary to include the definition of a type or constant that does not satisfy either of these conditions. For example, a downlink configuration parameter may be shared by the FSW implementation and the GDS and may be otherwise unused in the FPP model.

In this case you can mark a type or constant definition as a dictionary definition. A dictionary definition tells the FPP analyzer that whenever a dictionary is generated from the model, the definition should be included in the dictionary.

To write a dictionary definition, you write the keyword dictionary

before the definition.

You can do this for a constant definition, a type definition,

or an enum definition.

For example:

dictionary constant a = 1

dictionary array A = [3] U32

dictionary struct S { x: U32, y: F32 }

dictionary type T = S

dictionary enum E { A, B }Each dictionary definition must observe the following rules:

-

A dictionary constant definition must have a numeric value (integer or floating point), a Boolean value (true or false), a string value such as

"abc", or an enumerated constant value such asE.A. -

A dictionary type definition must define a displayable type, i.e., a type that the F Prime ground data system knows how to display. For example, the type may not be an abstract type. Nor may it be an array or struct type that has an abstract type as a member type.

For example, the following dictionary definitions are invalid:

dictionary constant a = { x = 1, y = 2.0 } # Error: a has struct type

dictionary type T # Error: T is an abstract type9. Defining Ports

A port definition defines an F Prime port. In F Prime, a port specifies the endpoint of a connection between two component instances. Components are the basic units of FSW function in F Prime and are described in the next section. A port definition specifies (1) the name of the port, (2) the type of the data carried on the port, and (3) an optional return type.

9.1. Port Names

The simplest port definition consists of the keyword port followed

by a name.

For example:

port PThis code defines a port named P that carries no data and returns

no data.

This kind of port can be useful for sending or receiving a triggering event.

9.2. Formal Parameters

More often, a port will carry data. To specify the data, you write formal parameters enclosed in parentheses. The formal parameters of a port definition are similar to the formal parameters of a function in a programming language: each one has a name and a type, and you may write zero or more of them. For example:

type FwSizeStoreType = U16

constant FW_FIXED_LENGTH_STRING_SIZE = 256

port P1() # Zero parameters; equivalent to port P1

port P2(a: U32) # One parameter

port P3(a: I32, b: F32, c: string) # Three parametersThe type of a formal parameter may be any valid type, including an

array type, a struct type, an enum type, or an abstract type.

For example, here is some code that defines an enum type E and

and abstract type T, and then uses those types in the

formal parameters of a port:

enum E { A, B }

type T

port P(e: E, t: T)The formal parameters form an element sequence in which the optional terminating punctuation is a comma. As usual for element sequences, you can omit the comma and use a newline instead. So, for example, we can write the definition shown above in this alternate way:

enum E { A, B }

type T

port P(

e: E

t: T

)9.3. Handler Functions

As discussed further in the sections on

defining components

and

instantiating components,

when constructing an F Prime application, you

instantiate port definitions as output ports and

input ports of component instances.

Output ports are connected to input ports.

For each output port pOut of a component instance c1,

there is a corresponding auto-generated function that the

implementation of c1 can call in order to invoke pOut.

If pOut is connected to an input

port pIn of component instance c2, then invoking pOut runs a

handler function pIn_handler associated with pIn.

The handler function is part of the implementation of the component

C2 that c2 instantiates.

In this way c1 can send data to c2 or request

that c2 take some action.

Each input port may be synchronous or asynchronous.

A synchronous invocation directly calls a handler function.

An asynchronous invocation calls a short function that puts

a message on a queue for later dispatch.

Dispatching the message calls the handler function.

Translating handler functions:

In FPP, each output port pOut or input port pIn has a port type.

This port type refers to an FPP port definition P.

In the C++ translation, the signature of a handler function

pIn_handler for pIn

is derived from P.

In particular, the C++ formal parameters of pIn_handler

correspond to the

FPP formal parameters of P.

When generating the handler function pIn_handler, F

Prime translates each formal parameter p of P in the following way:

-

If p carries a primitive value, then p is translated to a C++ value parameter.

-

Otherwise p is translated to a C++

constreference parameter.

As an example, suppose that P looks like this:

type T

port P(a: U32, b: T)Then the signature of pIn_handler might look like this:

virtual void pIn_handler(U32 a, const T& b);Calling handler functions:

Suppose again that output port pOut of component instance c1

is connected to input port pIn of component instance c2.

Suppose that the implementation of c1 invokes pOut.

What happens next depends on whether pIn is synchronous

or asynchronous.

If pIn is synchronous, then the invocation is a direct

call of the pIn handler function.

Any value parameter is passed by copying the value on

the stack.

Any const reference parameter provides a reference to

the data passed in by c1 at the point of invocation.

For example, if pIn has the port type P shown above,

then the implementation of pIn_handler might look like this:

// Assume pIn is a synchronous input port

void C2::pIn_handler(U32 a, const T& b) {

// a is a local copy of a U32 value

// b is a const reference to T data passed in by c1

}Usually the const reference is what you want, for efficiency reasons.

If you want a local copy of the data, you can make one.

For example:

// Copy b into b1

auto b1 = bNow b1 has the same data that the parameter b would have

if it were passed by value.

If pIn is asynchronous, then the invocation does not

call the handler directly. Instead, it calls

a function that puts a message on a queue.

The handler is called when the message is dispatched.

At this point, any value parameter is passed by

copying the value out of the queue and onto the stack.

Any const reference parameter is passed by

(1) copying data out of the queue and onto the stack and

(2) then providing a const reference to the data on the stack.

For example:

// Assume pIn is an asynchronous input port

void C2::pIn_handler(U32 a, const T& b) {

// a is a local copy of a U32 value

// b is a const reference to T data copied across the queue

// and owned by this component

}Note that unlike in the synchronous case, const references in parameters refer to data owned by the handler (residing on the handler stack), not data owned by the invoking component. Note also that the values must be small enough to permit placement on the queue and on the stack.

If you want the handler and the invoking component to share data

passed in as a parameter, or if the data values are too large

for the queue and the stack, then you can use a data structure

that contains a pointer or a reference as a member.

For example, T could have a member that stores a reference

or a pointer to shared data.

F Prime provides a type Fw::Buffer that stores a

pointer to a shared data buffer.

Passing string arguments:

Suppose that an input port pIn of a component instance c

has port type P.

If P has a formal parameter of type string,

the corresponding formal parameter in the handler for pIn is

a constant reference to Fw::StringBase, which is an abstract

supertype of all F Prime string types.

The string object referred to depends on whether pIn is synchronous

or asynchronous:

-

If

pInis synchronous, then the reference is to the string object used in the invocation. In this case the size specified in thestringtype is ignored. For example, if a string of length 80 is bound to a port parameter whose type isstring size 40, the reference is to the original string of length 80. -

If

pInis asynchronous, then the reference is to a string object on the stack whose size is bounded by the size named in the port parameter. For example, if a string of length 80 is bound to a port parameter whose type isstring size 40, then the reference is to a string consisting of the first 40 characters of the original string.

9.4. Reference Parameters

You may write the keyword ref in front of any formal parameter p

of a port definition.

Doing this specifies that p is a reference parameter.

Each reference parameter in an FPP port becomes a mutable

C++ reference at the corresponding place in the

handler function signature.

For example, suppose this port definition

type T

port P(a: U32, b: T, ref c: T)appears as the type of an input port pIn of component C.

The generated code for C might contain a handler function with a

signature like this:

virtual void pIn_handler(U32 a, const T& b, T& c);Notice that parameter b is not marked ref, so it is

translated to const T& b, as discussed in the previous section.

On the other hand, parameter c is marked ref, so it

is translated to T& c.

Apart from the mutability, a reference parameter has the same

behavior as a const reference parameter, as described in

the previous section.

In particular:

-

When

pInis synchronous, a reference parameter p ofpIn_handlerrefers to the data passed in by the invoking component. -

When

pInis asynchronous, a reference parameter p ofpIn_handlerrefers to data copied out of the queue and placed on the local stack.

The main reason to use a reference parameter is to return a value to the sender by storing it through the reference. We discuss this pattern in the section on returning values.

9.5. Returning Values

Optionally, you can give a port definition a return type.

To do this you write an arrow -> and a type

after the name and the formal parameters, if any.

For example:

type T

port P1 -> U32 # No parameters, returns U32

port P2(a: U32, b: F32) -> T # Two parameters, returns TInvoking a port with a return type is like calling a function with a return value. Such a port may be used only in a synchronous context (i.e., as a direct function call, not as a message placed on a concurrent queue).

In a synchronous context only, ref parameters provide another way to return

values on the port,

by assigning to the reference, instead of executing a C++ return statement.

As an example, consider the following two port definitions:

type T

port P1 -> T

port P2(ref t: T)The similarities and differences are as follows:

-

Both

P1andP2must be used in a synchronous context, because each returns aTvalue. -

In the generated C++ code,

-

The function for invoking

P1has no arguments and returns aTvalue. A handler associated withP1returns a value of typeTvia the C++returnstatement. For example:T C::p1In_handler() { ... return T(1, 2, 3); } -

The function for invoking

P1has one argumenttof typeT&. A handler associated withP2returns a value of typeTby updating the referencet(assigning to it, or updating its fields). For example:void C::p2In_handler(T& t) { ... t = T(1, 2, 3); }

-

The second way may involve less copying of data.

Finally, there can be any number of reference parameters,

but at most one return value.

So if you need to return multiple values on a port, then reference

parameters can be useful.

As an example, the following port attempts to update a result

value of type U32.

It does this via reference parameter.

It also returns a status value indicating whether the update

was successful.

enum Status { SUCCEED, FAIL }

port P(ref result: U32) -> StatusA handler for P might look like this:

Status C::pIn_handler(U32& result) {

Status status = Status::FAIL;

if (...) {

...

result = ...

status = Status::SUCCEED;

}

return status;

}

9.6. Pass-by-Reference Semantics

Whenever a C++ formal parameter p enables sharing of data between

an invoking component and a handler function pIn_handler,

we say that p has pass-by-reference semantics.

Pass-by-reference semantics occurs in the following cases:

-

p has reference or

constreference type, and the portpInis synchronous. -

p has a type T that contains a pointer or a reference as a member.

When using pass-by-reference semantics, you must carefully manage the use of the data to avoid concurrency bugs such as data races. This is especially true for references that can modify shared data.

Except in special cases that require special expertise (e.g.,

the implementation of highly concurrent data structures),

you should enforce the rule that at most

one component may use any piece of data at any time.

In particular, if component A passes a reference to component B,

then component A should not use the reference while

component B is using it, and vice versa.

For example:

-

Suppose component

Aowns some dataDand passes a reference toDvia a synchronous port call to componentB. Suppose the port handler in componentBuses the data but does not store the reference, so that when the handler exits, the reference is lost. This is a good pattern. In this case, we may say that ownership ofDresides inA, temporarily goes toBfor the life of the handler, and goes back toAwhen the handler exits. Because the port call is synchronous, the handler inBnever runs concurrently with any code inAthat usesD. So at most one ofAorBusesDat any time. -

Suppose instead that the handler in

Bstores the reference into a member variable, so that the reference persists after the handler exits. If this happens, then you should make sure thatAcannot useDunless and untilBpasses ownership ofDtoAand vice versa. For example, you could use state variables of enum type inAand inBto track ownership, and you could have a port invocation fromAtoBpass the reference and transfer ownership fromAtoBand vice versa.

9.7. Annotating a Port Definition

A port definition is an annotatable element. Each formal parameter is also an annotatable element. Here is an example:

@ Pre annotation for port P

port P(

@ Pre annotation for parameter a

a: U32

@ Pre annotation for parameter b

b: F32

)10. Defining State Machines

A hierarchical state machine (state machine for short) is a software subsystem that specifies the following:

-

A set of states that the system can be in. The states can be arranged in a hierarchy (i.e., states may have substates).

-

A set of transitions from one state to another that occur under specified conditions.

State machines are important in embedded programming. For example, F Prime components often have a concept of state that changes as the system runs, and it is useful to model these state changes as a state machine.

In FPP there are two ways to define a state machine:

-

An external state machine definition is like an abstract type definition: it tells the analyzer that a state machine exists with a specified name, but it says nothing about the state machine behavior. An external tool must provide the state machine implementation.

-

An internal state machine definition is like an array type definition or struct type definition: it provides a complete specification in FPP of the state machine behavior. The FPP back end uses this specification to generate code; no external tool is required.

The following subsections describe both kinds of state machine definitions.

State machine definitions may appear at the top level or inside a module definition. A state machine definition is an annotatable element. Once you define a state machine, you can instantiate it as part of a component. The component can then send the signals that cause the state transitions. The component also provides the functions that are called when the state machine does actions and evaluates guards.

10.1. Writing a State Machine Definition

External state machines:

To define an external state machine, you write the keywords

state machine followed by an identifier, which is the

name of the state machine:

state machine MThis code defines an external state machine with name M.

When you define an external state machine M, you must provide

an implementation for M, as discussed in the section

on implementing external state machines.

The external implementation must have a header file M.hpp

located in the same directory as the FPP file where

the state machine M is defined.

Internal state machines: In the following subsections, we explain how to define internal state machines in FPP. The behavior of these state machines closely follows the behavior described for state machines in the Universal Modeling Language (UML). UML is a graphical language and FPP is a textual language, but each of the concepts in the FPP language is motivated by a corresponding UML concept. FPP does not represent every aspect of UML state machines: because our goal is to support embedded and flight software, we focus on a subset of UML state machine behavior that is (1) simple and unambiguous and (2) sufficient for embedded applications that use F Prime.

In this manual, we focus on the syntax and high-level behavior of FPP state machines. For more details about the C++ code generation for state machines, see the F Prime design documentation.

10.2. States, Signals, and Transitions

In this and the following sections, we explain how to define internal state machines, i.e., state machines that are fully specified in FPP. In these sections, when we say “state machine,” we mean an internal state machine.

First we explain the concepts of states, signals, and transitions. These are the basic building blocks of FPP state machines.

Basic state machines: The simplest state machine M that has any useful behavior consists of the following:

-

Several state definitions. These define the states of M.

-

An initial transition specifier. This specifies the state that an instance of M is in when it starts up.

-

One or more signal definitions. The external environment (typically an F Prime component) sends signals to instances of M.

-

One or more state transition specifiers. These tell each instance of M what to do when it receives a signal.

Here is an example:

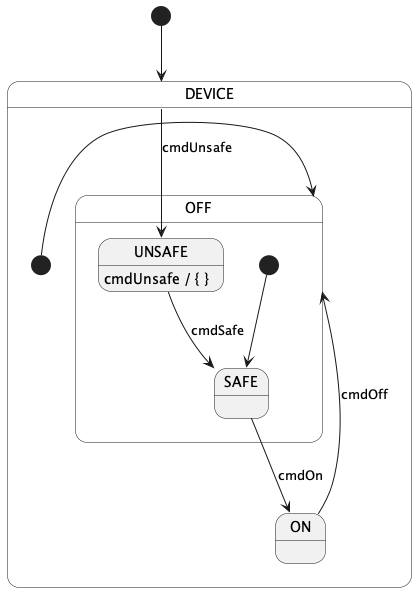

@ A state machine representing a device with on-off behavior

state machine Device {

@ A signal for turning the device on

signal cmdOn

@ A signal for turning the device off

signal cmdOff

@ The initial state is OFF

initial enter OFF

@ The ON state

state ON {

@ In the ON state, a cmdOff signal causes a transition to the OFF state

on cmdOff enter OFF

}

@ The OFF state

state OFF {

@ In the OFF state, a cmdOff signal causes a transition to the ON state

on cmdOn enter ON

}

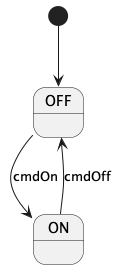

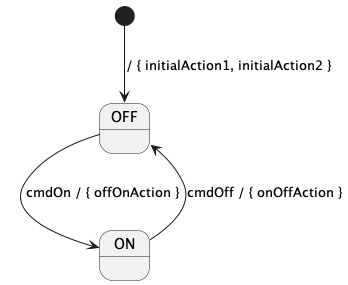

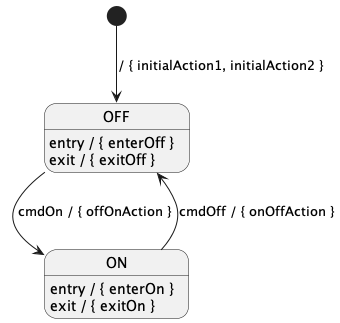

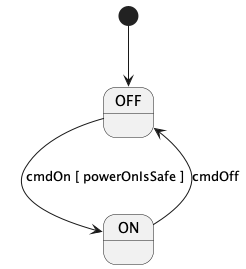

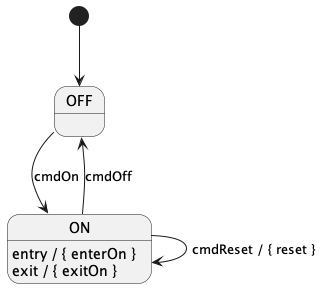

}Here is the example represented graphically, as a UML state machine:

This example defines a state machine Device that represents

a device with on-off behavior.

There are two states, ON and OFF.

The initial transition specifier initial enter OFF

says that the state machine is in state OFF when it starts up.

There are two signals: cmdOn for turning the device

on and cmdOff for turning the device off.

There are two state transition specifiers:

-

A specifier that says to enter state

OFFon receiving thecmdOffsignal in stateON. -

A specifier that says to enter state

ONon receiving thecmdOnsignal in stateOFF.

In general, a state transition specifier causes a transition

from the state S in which it appears to the state S' that